Introduction

In Parts 1 & 2 of Shattered Silicon, I laid out some thoughts on the problems I feel we’re often overlooking in our relationship to - and reliance on - modern technology.

In Part 1, I discussed the Digital Divide and the risks involved in assuming that simplification is the right approach to making technology accessible to all.

In Part 2, I talked about ‘Broadcast Culture’; the threat of stagnation inherent in a world where the incentives encourage us to say more than we do, where our data is worth more than our works, and the consequences of a ‘free-to-play’ system.

In the third and final post in this series, I’ll talk about an issue which is, in large part, a symptom of those discussed in previous posts, but which we will need to tackle in its own right if we’re to ensure that people retain control over their own lives and have the agency and opportunity they deserve.

This post was originally much longer and covered a large swathe of issues, but, thankfully, I happened to procrastinate long enough for the universe to manifest a perfect illustration of the problem. Thank you, CrowdStrike!

A Skill Issue

Over the past few decades, we’ve seen countless iterations of hardware and software which have tended, broadly speaking, to hide the detail away from users and instead present a streamlined, user-friendly workflow for the task at hand. From writing documents to ordering online, we’ve seen continuous development geared towards making life easy for users and creators alike. This is a good thing, generally speaking: nobody wants things to be more difficult than they need to be, and hiding complexity or detail where appropriate is often the right thing to do, and is usually welcomed by users themselves.

That being said, I believe we should be mindful of the collective risks we take as a society when we foster the impression that simplicity is itself a goal to be pursued.

Given recent advancements in AI and hardware capabilities, and the ever-increasing reliance on systems which are - despite appearances - incredibly complex, I can’t help but feel that a threat may be looming on the horizon - and it’s not entirely clear what we can (or should) do about it.

The Rumsfeldian Rubicon

Though I held no great love for the late Donald Rumsfeld, he did provide us with a quote that has the useful property of being simultaneously insightful, memorable, and widely applicable:

Reports that say that something hasn't happened are always interesting to me, because as we know, there are known knowns; there are things we know we know. We also know there are known unknowns; that is to say we know there are some things we do not know. But there are also unknown unknowns—the ones we don't know we don't know. And if one looks throughout the history of our country and other free countries, it is the latter category that tends to be the difficult ones.

- Donald Rumsfeld, February 12, 2002

Though Rumsfeld was talking in the context of military threats, the basic premise underpinning his statement originates from the world of psychology, in the work of Joseph Luft and Harrington Ingham and their development of the Johari Window.

To apply the basic premise to the technological world, we can make a few simple observations:

Known Knowns

There exists a vast and ever-growing sea of ‘things that can be known’.

‘I know that computer viruses exist, and I know how to defend against them’.

Known Unknowns

It’s impossible to know everything, but you can keep abreast of things you should know, and take reasonable actions based on advice.

‘I’ve heard about computer viruses, but I don’t really understand them or know what I should do about them, so I’ll install an antivirus to be safe’.

Unknown Unknowns

When you don’t know that you don’t know something, you may not even have the language to articulate your problem.

‘My computer’s acting strange’.

To this list, I feel we should add one more, in order to complete the set for the current reality of our technological world:

Unknown Knowns

When you know something relevant about a problem, but you misunderstand the implications or detail.

- or -

When you know somebody else knows something, and you rely on them to take action on your behalf.

- or -

False confidence.

‘I know about computer viruses, and my employer installed an antivirus on my computer, so I’ll have no problems’.

Of the four possibilities outlined above, it’s the ‘unknown knowns’ - those factors, threats, and problems that we’re ambiently aware of, but which we’ve acquired some learned helplessness about - that may be the most serious threat.

CrowdStrike

I began writing this post an embarrassingly long time ago, and ended up semi-abandoning it as a series of irritating challenges befell me: a medical issue with my eyes which made it difficult to look at screens and to read or write, a prolonged period with a lack of free time, and finally, the unsanctioned and frankly rude decision of my laptop to undergo an enormous hardware failure and transform itself, in an instant, into an expensive paperweight.

Thankfully, I subscribe to the view that good things come to those who wait, and on July 19th, 2024, CrowdStrike - a security provider to businesses all over the planet - brought the world to its knees and gave me an opportunity to delete almost the entirety of this post, and instead point out the fundamental takeaway: we should know how stuff works.

Though the CrowdStrike issue was unlikely to have affected people’s personal machines, it caused chaos for many businesses and organisations who rely on the company’s software, with Microsoft estimating that 8.5 million devices were affected (I suspect this number is actually pretty conservative, but we’ll probably never know the true figures).

Though there were very dramatic and highly visible impacts on some businesses - banks, airlines, and healthcare services to name a few - my real interest in this story relates to the resolution to the problem, rather than the problem itself.

To summarise - CrowdStrike pushed out an update which contained a single bad / corrupt / incorrect file (the real specifics of how and why this file ended up on people’s machines may remain a mystery unless CrowdStrike is compelled to disclose fairly forensic data) that caused their software, running in a privileged context on the host machine, to crash during startup, taking the operating system with it. The Register recently provided a fairly concise overview of the problem if you’re interested in learning more. Ultimately, the cause doesn’t much matter for this post: CrowdStrike took 8.5 million computers offline and the only surefire way to fix it was with physical access to the machine.

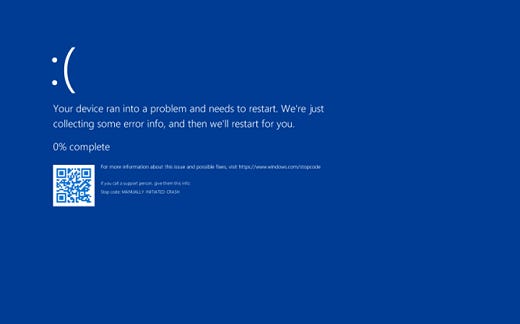

If you are an average person, working from home on a company machine, this is where everything falls apart. You wake up, go through your morning routine, and get yourself set up to start your work-day, only to be confronted with the dreaded Blue Screen Of Death:

No amount of restarting makes the problem go away, so you call into work, where, in the best-case scenario, your understaffed and overwhelmed IT department is now scrambling to identify the cause of the issue and guide staff one-by-one through Microsoft’s 12-step recovery process, which involves explaining things like ‘Safe Mode’, ‘BitLocker Recovery Key’, and ‘Terminal’ to normal human beings who, by and large, don’t know or even care to know what any of those things mean.

In the worst-case scenario, you’re forbidden from even attempting to fix the problem yourself, and must either bring the machine to somebody who is authorised to fix it, wait for somebody to come out to your home to fix it, or box it up and post the machine off to the IT elves.

Though it would be extraordinarily unfortunate for the CrowdStrike problem to happen again any time soon, one major factor contributing to the severity and reach of the outage was (and remains, for now at least) the mandated use of specific security software to protect corporate assets.

Though there is value in securing all of your assets in the same way, there is also a cost: doing so means that they all implicitly share the exact same weaknesses and vulnerabilities.

I’m not, of course, suggesting that corporate policy should change to introduce a confusing new mixture of mandated security software, or that it should be left up to employees to secure their own machines however they see fit, but that we must understand the risks inherent in the system if we wish to avoid catastrophe.

It’s very difficult to imagine a way to avoid problems like this occurring in the future: software is written by humans, mistakes happen, validation processes fail, and the specific nature of cybersecurity threats necessitates a degree of privilege and trust in security software which leaves you open to a certain amount of risk. It’s a trade-off: choose your poison.

Managing Risk

The best way, in my view, to mitigate these risks is for end-users to learn about the machines and systems they use. Understanding a few basic concepts and approaches to recovering after a failure can help reduce the impact of many potentially serious problems.

In the same way that companies now provide - and in many cases require - training around inter-personal behaviour and health & safety, I feel that there’s a strong argument to be made for training around best technology practices and emergency IT problem resolution, especially in the modern era where remote working is so common and physical access to machines is so much more difficult for IT professionals.

On a more fundamental level, we should perhaps consider whether ‘company-controlled hardware’ is the best practice to follow when significant parts of the workforce are now remote.

From the perspective of employers - it might feel like it’s in their best interests to retain control and ownership of remote hardware, but, as we’ve just seen, it can be an expensive and debilitating risk when things go wrong.

From the perspective of employees - it’s obviously cheaper to let the company provide the hardware and absorb the costs of maintenance & security, but a prolonged outage preventing you from working can raise other issues: if the company takes a significant hit, and is forced to reduce headcount, you may be exposed to a real threat to your livelihood.

There are no easy answers - it’s a difficult problem and one that constantly evolves as new threats are identified. It’s undeniable, however, that a workforce decently trained in IT & security fundamentals would probably withstand widespread disruption much more effectively.

The temptation may be to rely on the hope that providers like CrowdStrike simply ‘fix the problem’ and ‘put processes in place’ to avoid it happening again, but the simple reality is this: it will happen again sooner or later, and the more centralised our systems become, and the more dependent we make ourselves on technological solutions to technological problems, the worse the fallout will be. The more we allow our learned helplessness to be exploited as an opportunity for new products and services promising yet more ‘simplicity’ and unattainable security, the more we hand over control to others in the hope that they never make mistakes. Reader, I assure you: mistakes will be made!

For many of us, technology is the tool of our trade - and for all of us, technology is enveloping our homes, schools, workplaces, governments, and essentially every institution we might interact with, and rely upon, in our daily lives.

We should treat the CrowdStrike failure as a warning: these things will happen, and the broader the reach of technology into our lives, the worse it will be. The best defence is to be prepared; to learn about the hardware and software we use so that when something does go wrong, we know how to identify the problem, how to communicate with one another about it, and how to resolve it. At the very least, we should arm ourselves with the language to be able to seek help or advise others on how to help themselves.

I’m not a security expert - and I don’t pretend to understand all of the many ways in which services like those CrowdStrike provide do actually help to prevent a litany of potential disasters - but I do know technology well enough to know that an overreliance on a single point of failure is not a good idea. Diversification of the systems, services, and practices we use can at the very least help to avoid universal failure: it’s worth remembering that this particular outage was not caused by a virus or (as far as we know) some malicious actor, but a bug in the very software millions are relying on to prevent such a disaster in the first place.

At the risk of raising the hackles of the most ardent capitalists amongst us: perhaps the time has arrived when we should seriously consider the idea that people should own and be responsible for the tools they rely on to produce their value. It may be an expensive proposition, but the time is rapidly approaching when many people’s livelihoods are intrinsically tied to the use of technology they don’t own, don’t control, don’t understand, and are forbidden from fixing. This, to me, seems like an untenable risk. In a world where working from home is rapidly being normalised, where people work several jobs at once, and where the ‘gig economy’ is growing and reshaping the workforce, it seems only fitting to me that the idea that workers should own the means of production should see something of a revitalisation.

We’re All Old

The breakneck pace of technological advancement means that knowledge and skills must constantly evolve to keep pace. The stereotype that ‘old people can’t use computers’ is no longer relevant: today, almost nobody can really use computers, even though they rely on them for everything - and the problem is only getting worse.

Jason Thor Hall from PirateSoftware provided a short but illuminating anecdote about his experience at a Minecraft convention, where he discovered that a huge number of young people, at a videogame convention, didn’t understand what a keyboard, mouse, or game controller was.

Though it may be unfair to extrapolate from the behaviour and technological skillsets of children, I’ve seen enough evidence with my own eyes that the endless drive for simplicity, the ‘iPad-ification’ of technology, and the predominance of ‘trivial’ technological usage is resulting in a situation where huge numbers of people become helpless when confronted with anything beyond business-as-usual.

We’re all old now - technology has reached deep into all of our lives, whether we wanted it or not, and even an adept user’s skills and knowledge can rapidly become outdated or obsolete if they don’t take active steps to remain engaged and to maintain their capabilities.

In a world where even the software we rely on to keep us secure can itself cause a catastrophic global outage, we owe it to ourselves and our future to ensure that we don’t allow ignorance to leave us helpless in the shadow of the singularity.

Related Reading

Strongly related to the idea that we should learn how stuff works is the idea that you should at least have the right to fix stuff when it breaks. You might like to subscribe to Fight to Repair, a newsletter which covers the ongoing battle to secure consumer and user rights to repair the stuff we use every day.
